Portfolio Details

Development of Object Detection System for Retail Products Using YOLOv4-Tiny Architecture

In the modern retail industry, efficient inventory management and accurate product identification are crucial for optimizing operations and enhancing customer experience. Traditional methods of manual counting and categorization are not only time-consuming but also prone to errors, leading to potential financial losses and customer dissatisfaction. To address these challenges, the integration of advanced computer vision technologies has become increasingly important.

This project focuses on the development of an object detection system for retail products using the YOLOv4-Tiny architecture. YOLO (You Only Look Once) is a state-of-the-art, real-time object detection algorithm known for its speed and accuracy. The Tiny variant of YOLOv4 offers a more lightweight and efficient model, making it ideal for deployment in resource-constrained environments, such as embedded systems or edge devices commonly found in retail settings.

The primary objective of this project is to design and implement a system capable of detecting and identifying various retail products on shelves or in storage with high accuracy and low latency. By leveraging the YOLOv4-Tiny architecture, the system aims to provide real-time analytics that can assist in inventory tracking, shelf management, and loss prevention. The project involves dataset preparation, model training, and fine-tuning, as well as the integration of the detection system with retail software platforms.

This introduction outlines the scope and significance of the project, highlighting the need for innovative solutions in the retail sector and the potential impact of implementing an efficient object detection system. Through this endeavor, we aim to demonstrate the practical applications of deep learning in retail and explore the benefits of adopting advanced technologies for everyday business operations.

Process

- Data Collection: A dataset of images of retail products was collected from various sources, including online marketplaces and in-store captures. The dataset consisted of 106 classes of powdered milk products in both box and can formats.

- Data Preprocessing: The collected images were preprocessed to ensure consistency in size, format, and quality. This involved resizing images to a uniform size, converting them to a suitable format, and enhancing image quality where necessary.

- Data Annotation: The dataset was annotated with bounding boxes to identify and label the objects of interest, facilitating the model's learning process.

- Model Selection and Training: The YOLOv4-Tiny architecture was chosen because it is lightweight enough for resource-constrained devices while still providing satisfactory object detection results. The model was trained on the preprocessed dataset for 46 hours out of a total of 111 hours.

- Model Evaluation: The trained model was evaluated on a test dataset to assess its performance. The evaluation metrics used included mean Average Precision (mAP), Precision, Recall, and F1-score.

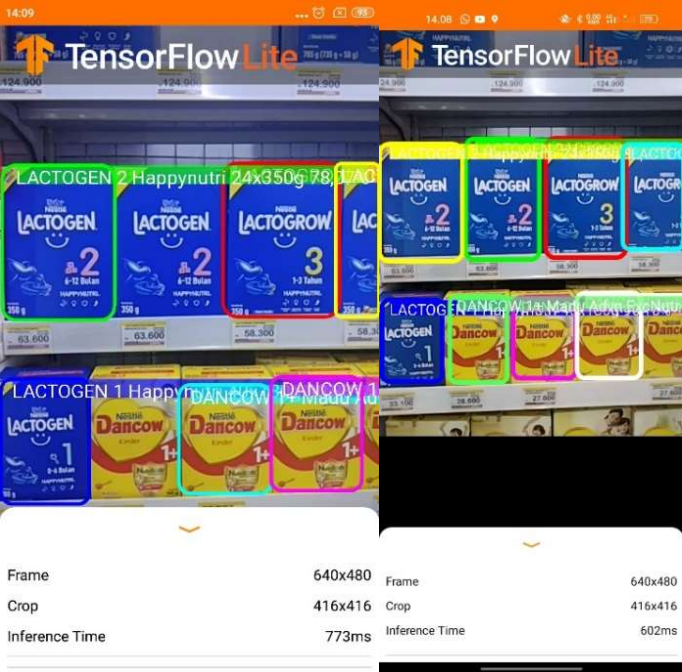

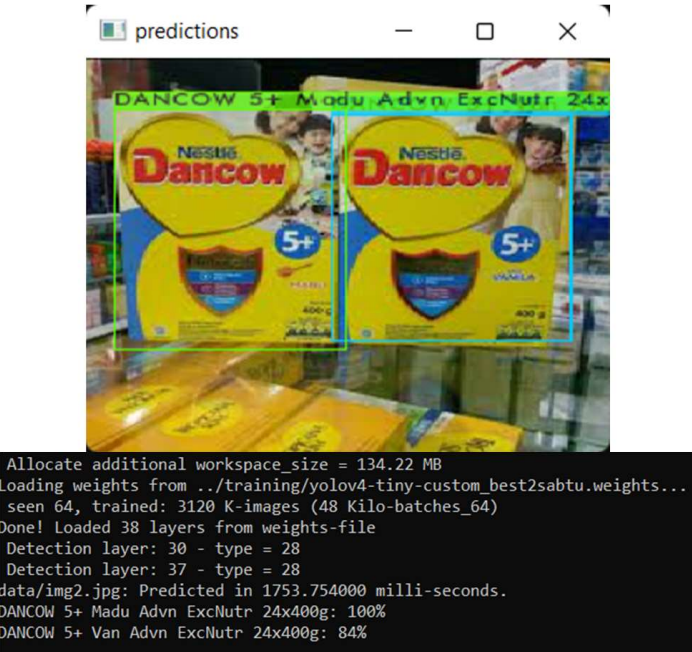

- Deployment: The trained model was deployed on an Android device to test its real-time object detection capabilities. The model demonstrated an average inference time ranging from 600 to 700 ms, with some instances requiring up to 900 ms.
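The evaluation step above reports Precision, Recall, and F1-score. As a minimal sketch of how such metrics are usually derived for a detector, the code below computes the Intersection over Union (IoU) of two boxes and then turns counts of true positives, false positives, and false negatives into the three scores. The box layout `(x_min, y_min, x_max, y_max)` and the function names are assumptions made for illustration; they are not taken from the project repository.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max).

    The coordinate layout is an assumption for this sketch.
    """
    # Corners of the overlapping rectangle (if any).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp, fp, fn):
    """Precision, Recall, and F1-score from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    # Two 2x2 boxes that overlap in a 1x1 square.
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
    # Hypothetical counts, not results from this project.
    print(precision_recall_f1(tp=8, fp=2, fn=2))
```

A prediction is typically counted as a true positive when its IoU with a ground-truth box of the same class exceeds a chosen threshold (0.5 is a common choice); the threshold used in this project is not stated here.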

Result

This study implemented the YOLOv4-Tiny architecture for object detection on retail products, covering 106 classes of powdered milk products in both box and can formats. The model was trained for 46 hours out of a total of 111 hours. On the test dataset it reached a mAP of 92.12%, a Precision of 0.78, a Recall of 0.82, and an F1-score of 0.80. On an Android device, the average inference time ranged from 600 to 700 ms, with some instances requiring up to 900 ms. Because each detection completes in under 1 second, the study treats this latency as sufficient for real-time object detection.
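The reported figures can be cross-checked with simple arithmetic. The sketch below recomputes the F1-score from the reported Precision and Recall, and converts the reported inference times into an approximate throughput in frames per second. The helper names are illustrative, and the throughput estimate assumes that frames are processed one at a time with no other overhead.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def frames_per_second(latency_ms):
    """Approximate throughput if each frame takes latency_ms to process."""
    return 1000.0 / latency_ms


# Values reported in the results above.
print(round(f1_score(0.78, 0.82), 2))  # 0.8, matching the reported F1-score

for latency_ms in (600, 700, 900):
    print(latency_ms, "ms ->", round(frames_per_second(latency_ms), 2), "FPS")
# 600 ms -> 1.67 FPS
# 700 ms -> 1.43 FPS
# 900 ms -> 1.11 FPS
```

At roughly 1.4 to 1.7 frames per second, the deployment fits use cases such as scanning a shelf one view at a time better than continuous video processing.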

Project information

- Category: Data Science

- Tools: Python, Jupyter Notebook, Pandas, NumPy, Matplotlib, Seaborn, Scikit-learn, TensorFlow, Keras, YOLOv4-Tiny

- Project date: 19 June 2023

- Project URL: https://github.com/RaihanAjah/Development-of-an-Object-Detection-System-in-Retail-Products-with-Yolov4-Tiny-Architecture